The problem with run length encoding was that our chunk size (e.g. 4 bits) is fixed. This is nonoptimal.

With Huffman encoding, we can have variable length sequences to represent each character. However, we need a prefix free encoding. What this means is that if we represent A with 11, and D with 110, and F with 01, T with 0, we have an ambiguity. If we encounter a 1101, is this AF, or DT? A prefix free encoding ensures such an ambiguity cannot arise.

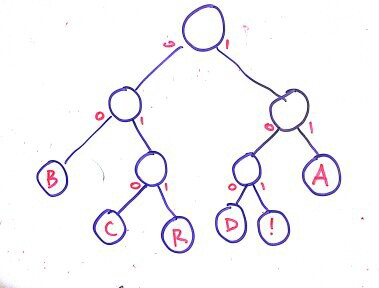

We can represent any prefix free encoding with a binary trie. All the characters are stored in leaves. The left branch represents a 0, and the right represents a 1.

As an example:

So while decoding, if we encounter a 011, we know this represents R.

This trie is created for all letters/symbols of the alphabet.

This is for decoding. How do you encode? A simple way is to create a symbol table with all your letters, with the values being the bit strings.

Again, note that for a given character, the size of the bitstring is variable.

Constructing the Prefix Free Encoding

- Scan through the whole text and note down the frequency counts for each character.

- Construct a node for each character: Make each of them a single-node trie. Give them a weight equal to the frequency.

- Select the two tries with minimum weights, and merge them to form a single trie with weight the sum of the two tries. By merging, I mean form a new root node, and put one trie as its left child, and the other trie as its right child.

- Recurse till you have only one trie.

Properties

- The text encoded by each bitstring is of fixed length.

- The bit string used to encode a piece of text (usually a single character) is of variable length.

- The cost to build this encoding is \(N+R\log R\) where \(R\) is the alphabet size.

- The compression is optimal (for single character encodings).

- You need the whole text before you begin encoding, so it’s not a good

idea for live communications.

- However, if we use the algorithm primarily to encode English text, we could just use a well known trie that utilizes the expected frequency counts of each letter.

- Need to communicate the prefix encoding. It is different for each text you compress, and the decompression side needs the trie you used to encode it.